Your Sales Reps spend hours researching prospects before every demo. Your Customer Success Managers scramble to pull together context before quarterly business reviews. Your support team answers the same questions over and over again.

What if you could delegate these tasks to AI agents that already know your business inside out?

Realm's AI agents automate complex GTM workflows by tapping into your company's knowledge base and taking action in the tools your team uses every day. The best part? Building an agent with Realm takes minutes, not months, and requires zero coding.

Here's how to create your first AI agent, step by step.

6 steps to build an AI agent with Realm

Realm's intuitive agent builder lets anyone on your team create agents that accelerate their work. You define the agent's purpose, capabilities, and workflow in six easy steps.

1. Instructions - Write step-by-step instructions

Write clear, step-by-step instructions in natural language describing exactly which tasks your agent should perform and in what order. In your instructions, you can reference specific documents (e.g. @Template: Business case) or tools (e.g. @Salesforce) and use variables (e.g. {{ Customer name }}).

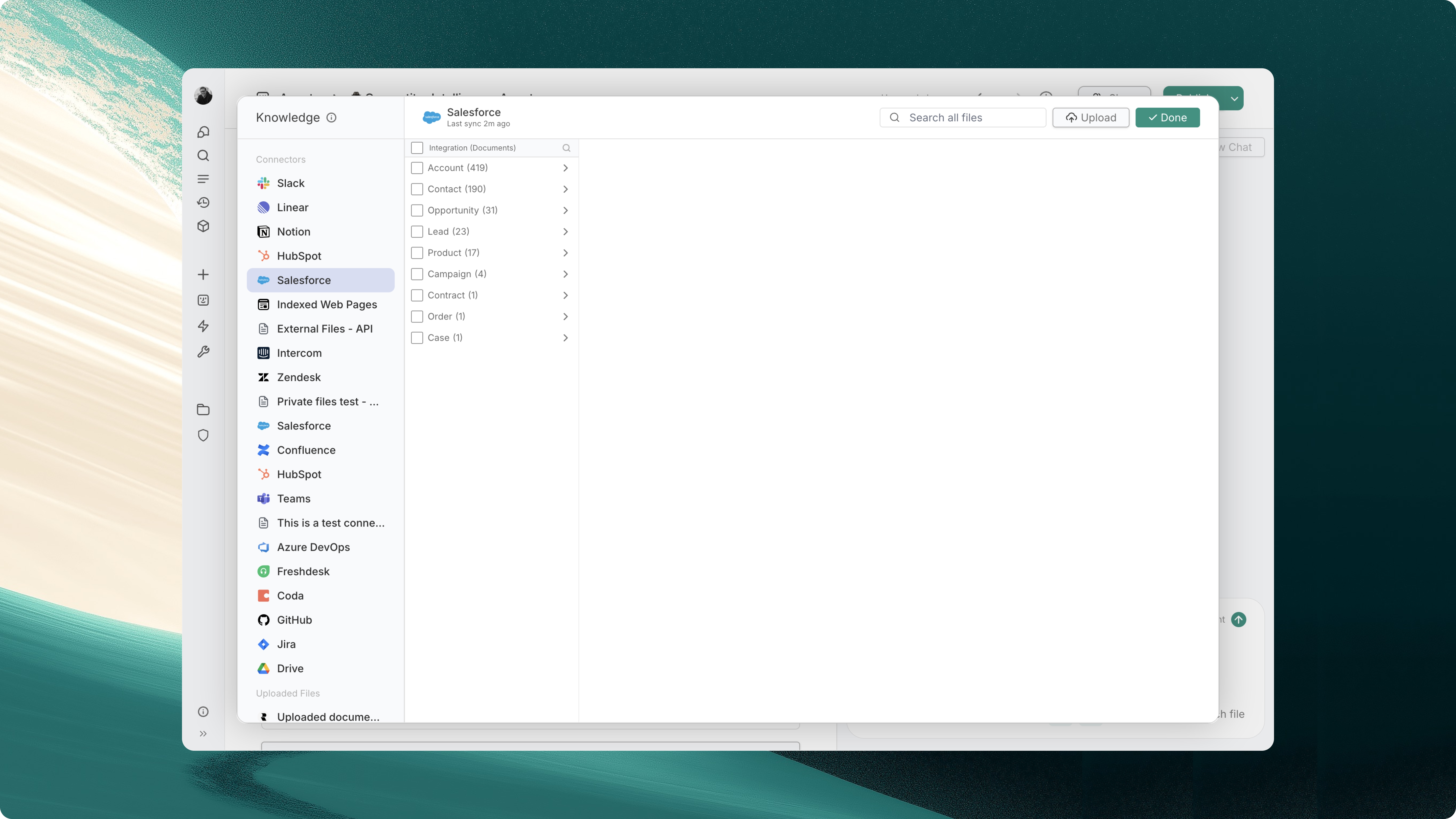

2. Knowledge - Train on your business context

Curating the knowledge available to your agent ensures it operates with the right business context and performs its tasks accurately. Choose the specific documents and folders your agent can access and search when executing your instructions. You can also upload individual files for the agent and enable web search.

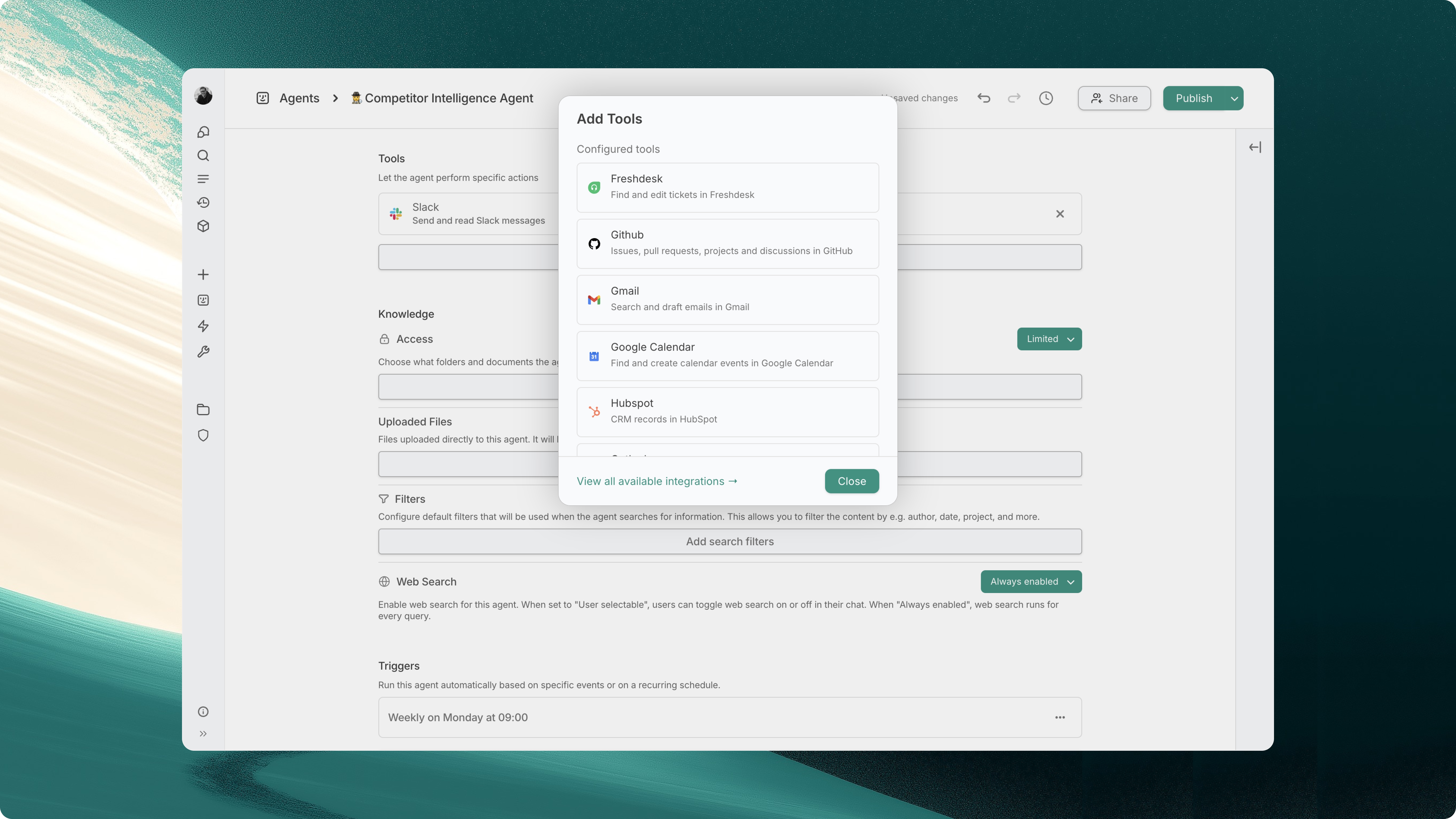

3. Tools - Let your agents take action

Tools allow agents to take actions across external applications, such as updating a CRM, drafting an email, or sending you a Slack message. With tools, agents can automate complex, multi-step workflows the same way a human would.

Realm Agents currently support:

- HubSpot

- Salesforce

- Gmail & Outlook

- Google Calendar & Outlook Calendar

- Slack

- Freshdesk

- GitHub

- MCP Servers

We are continuously working to add more tools, and if your team relies on a tool that our agents don’t yet support, please don’t hesitate to get in touch.

4. Workflows - Automate based on events or schedules

Workflows turn agents into proactive collaborators that act on your behalf automatically, 24/7.

You can set up agents that are triggered by:

- Events – Launch your agent when something happens in your connected systems, for example when a new website visitor is posted to a Slack channel, a HubSpot deal changes stage, or a support ticket is created in Freshdesk.

- Schedules – Run your agent on a recurring schedule, such as every Monday morning, daily at 6 PM, or on the first day of each month.

Example use cases from our own team:

- Our Competitor Intelligence Agent runs every Monday morning to generate a weekly briefing.

- Our Demo Request Agent activates whenever a new message appears in the #demo_requests Slack channel and automatically qualifies the prospect.

5. Test and deploy

Before activating your agent, run it manually to ensure it follows your instructions correctly and produces the expected results. Once verified, activate your agent to start automating your workflow according to the triggers you've configured.

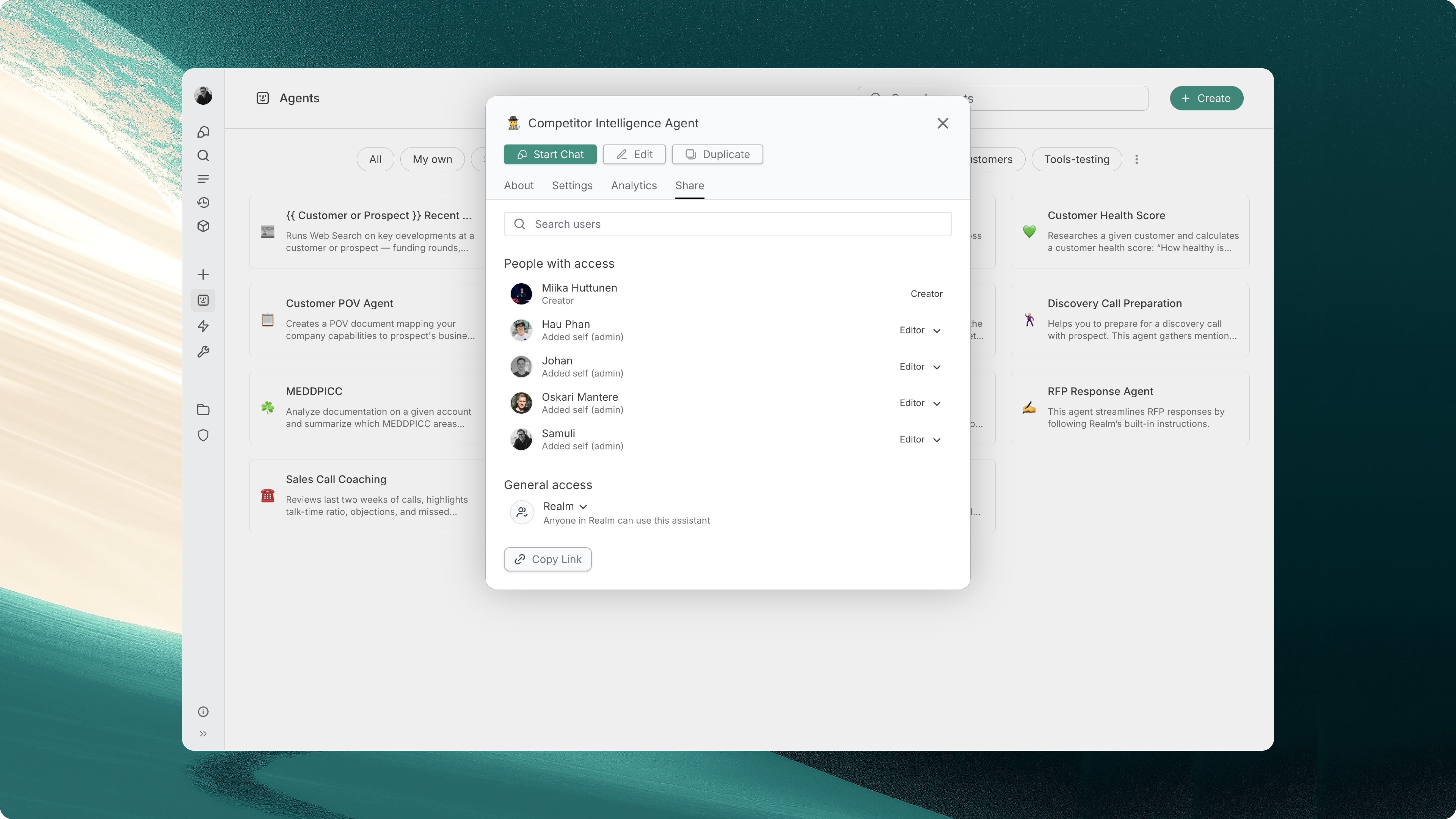

6. Share with your team

Share your agent with colleagues to build a library of specialized agents that lets your organization automate GTM workflows at scale.

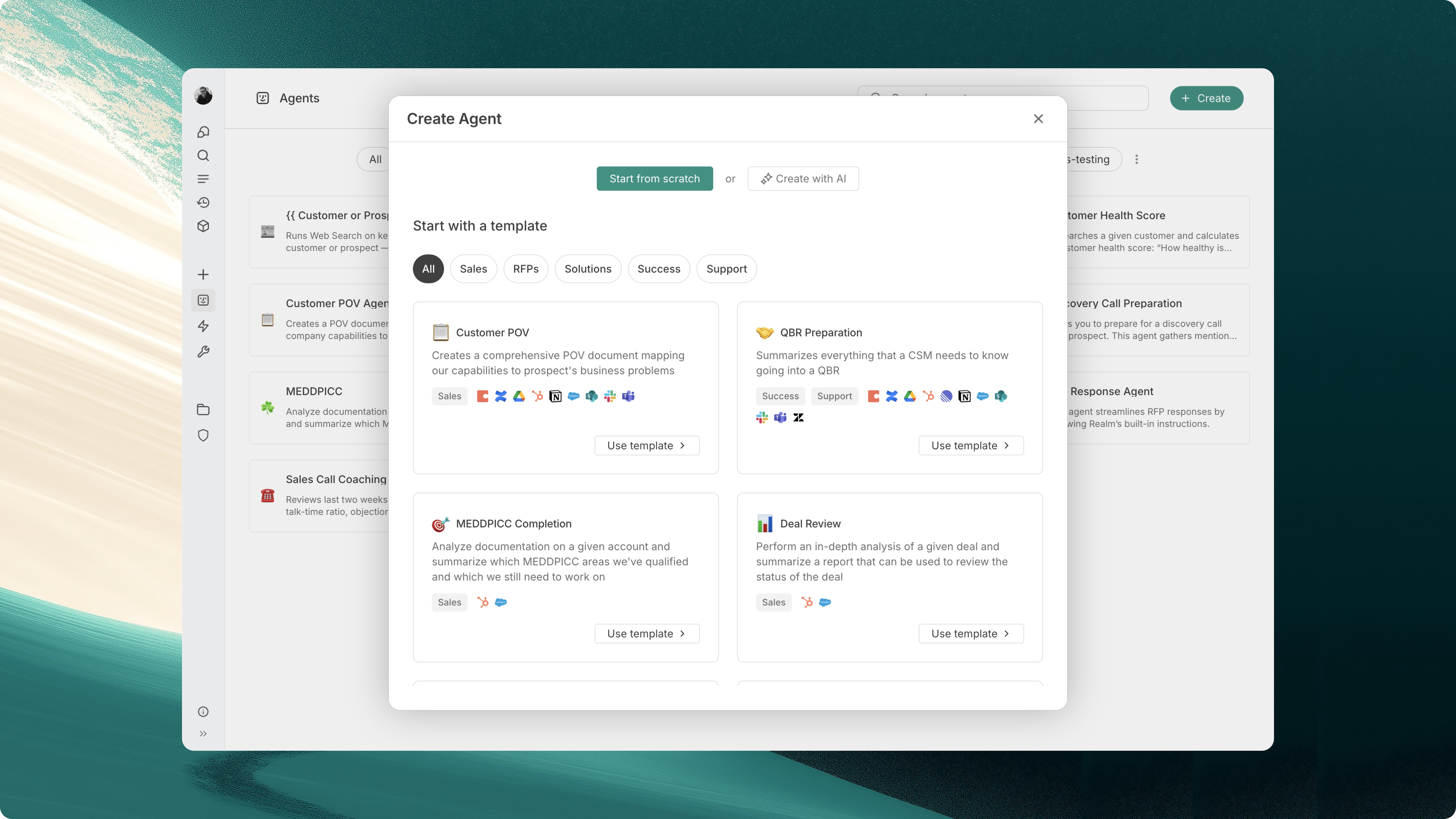

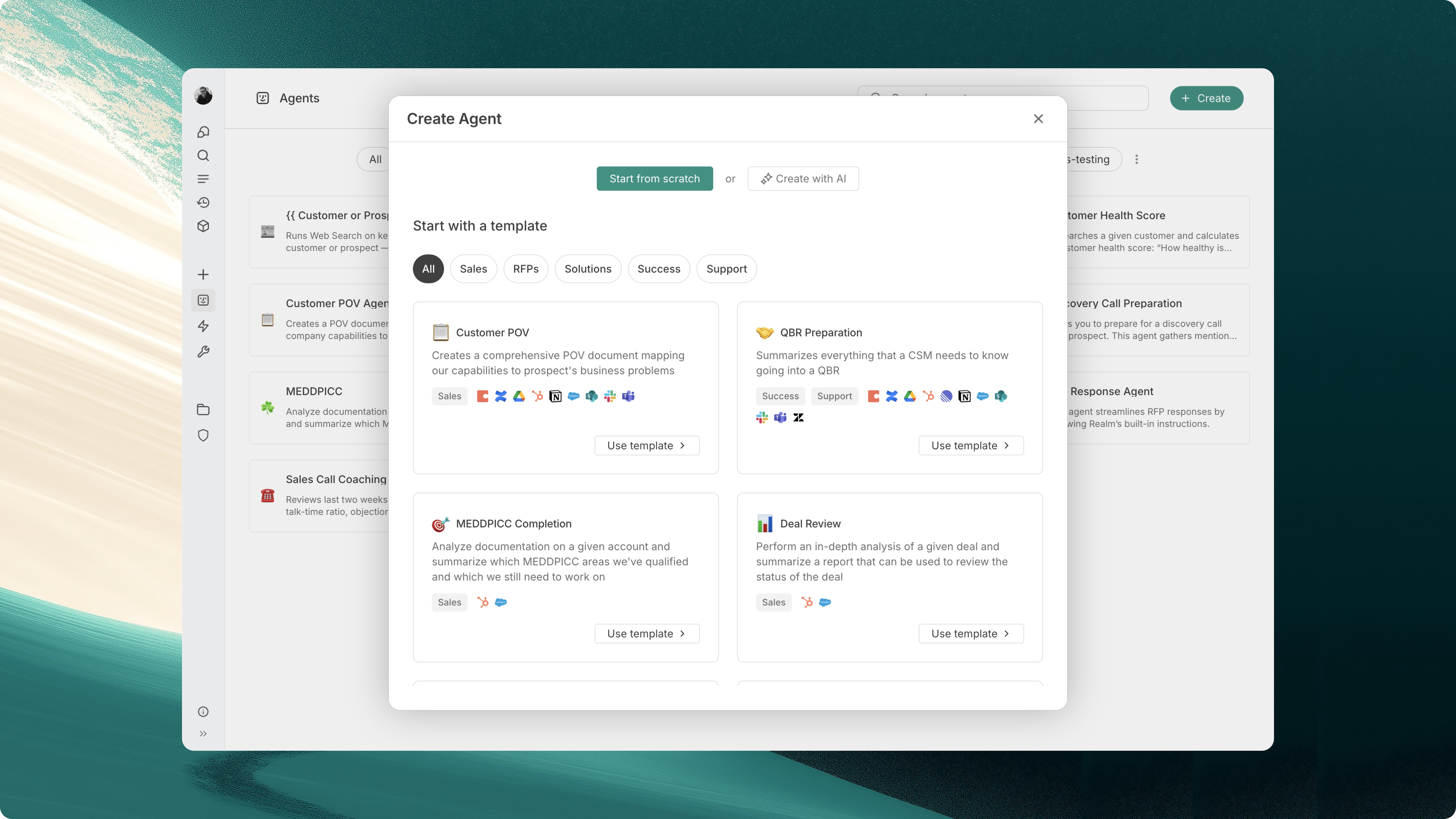

Get started in minutes with agent templates

Don't want to build from scratch? Realm offers a library of ready-to-use agent templates designed for high-leverage use cases across Sales, Customer Success, Presales, and Support. You can duplicate any template, customize it for your needs, and deploy it within minutes.

Start building your GTM AI workforce today

Realm Agents are designed to augment your team, not replace it. By automating repetitive, time-consuming tasks like researching prospects, updating CRM fields, or preparing meeting briefings, our agents free your team to focus on high-impact work: building relationships, thinking strategically, and closing deals.

The best part? With Realm, getting started with AI agents takes minutes, not months. No coding. No complex integrations. Just clear instructions written in plain English, connected to your company's knowledge and the tools your team already relies on.

Ready to scale your GTM with automated workflows? Book a demo to start building your first AI agent today.
